- Those who knew that S3 was down, and the internet itself was in crisis.

- Those who knew that some of the web sites and phone apps they used weren't working right, but didn't know why.

- Those who didn't notice and wouldn't have cared.

I was obviously in group 1, the engineers, who whisper to each other, "where were you when S3 went down?"

I was running the latest hadoop-aws s3a tests, and noticed that some of my tests were failing. Not the ones against S3 Ireland, but those against the landsat bucket we use in lots of our hadoop tests, as it is a source of a 20 MB CSV file where nobody has to pay download fees, or spend time creating a 20 MB CSV file. Apparently there are lots of landsat images too, but our hadoop tests stop at seeking in the file. I've a spark test which does the whole CSV parse thing, as well as one I use in demos as an example not just of dataframes against cloud data, but of how data can be dirty, such as with a cloud cover of less than 0%.
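For anyone wondering what that dirty-data check amounts to, here is a minimal sketch in plain Python rather than Spark. The sample rows and the column names (`entityId`, `acquisitionDate`, `cloudCover`) are assumptions made up for illustration, not a copy of the real landsat index or of the actual test code.

```python
import csv
import io

# Hypothetical sample rows; column names are assumed, not the real schema.
SAMPLE = """entityId,acquisitionDate,cloudCover
scene-a,2017-02-27,12.5
scene-b,2017-02-27,-1.0
scene-c,2017-02-28,0.0
"""


def split_by_cloud_cover(text):
    """Partition rows into (clean, dirty).

    A row counts as dirty if its cloud cover is negative or cannot be parsed.
    """
    clean, dirty = [], []
    for row in csv.DictReader(io.StringIO(text)):
        try:
            cover = float(row["cloudCover"])
        except (KeyError, TypeError, ValueError):
            dirty.append(row)
            continue
        # A cloud cover below 0% is physically meaningless, so flag it.
        (dirty if cover < 0 else clean).append(row)
    return clean, dirty


clean, dirty = split_by_cloud_cover(SAMPLE)
print([row["entityId"] for row in dirty])  # ['scene-b']
```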

Partial test failures: never good.

It was only when I noticed that other things were offline that I cheered up: unless somehow my delayed-commit multipart put requests had killed S3, I wasn't to blame. And with everything offline I could finish work at 18:30 and stick some lasagne in the oven. (I'm fending for myself & keeping a teenager fed this week).

What was impressive was seeing how deep it went into things. Strava app? Toast. Various build tools and things? Offline.

Which means that S3 wasn't just a SPOF for my own code, but for a lot of transitive dependencies, meaning that things just weren't working, all the way up the chain.

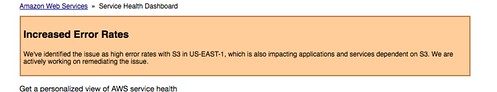

S3 is clearly so ubiquitous a store that the failure of US-East is enough to cause major failures everywhere.

Which makes designing to be resilient to an S3 outage so hard: you not only have to make your own system somehow resilient to failure, you have to know how your dependencies cope with such problems. For which step one is: identify those dependencies.

Fortunately, we all got to find out on Tuesday.
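If you would rather not wait for the next outage to do that identification, a rough sketch of the idea is to write down who depends on whom and walk the graph backwards from the thing that failed. Everything in the map below is hypothetical; the service names are invented for illustration, and a real inventory would have to come from your own build files and deployment configs.

```python
from collections import deque

# Hypothetical dependency map: each service lists what it depends on directly.
DEPENDS_ON = {
    "my-app": ["build-tool", "metrics"],
    "build-tool": ["artifact-repo"],
    "artifact-repo": ["s3"],
    "metrics": [],
    "status-page": ["s3"],
}


def affected_by(failed, depends_on):
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the edges so we can walk from the failed service to its dependents.
    dependents = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    # Breadth-first walk up the chain of dependents.
    seen, queue = set(), deque([failed])
    while queue:
        current = queue.popleft()
        for service in dependents.get(current, ()):
            if service not in seen:
                seen.add(service)
                queue.append(service)
    return seen


print(sorted(affected_by("s3", DEPENDS_ON)))
# ['artifact-repo', 'build-tool', 'my-app', 'status-page']
```

Note that `my-app` never mentions S3 itself; it only shows up because its build tool does, which is exactly the transitive problem.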

Trying to mitigate against a full S3 outage is probably pretty hard. At the very least:

- replicated front end content across different S3 installations would allow you to present some kind of UI.

- if you are collecting data for processing, then you need a contingency plan for the sink being offline: alternate destinations, local buffering, discarding (nifi can be given rules here).

- We need our own status pages which can be updated even if the entire infra we depend on is missing. That is: host somewhere else, and have multiple people with login rights, so an individual isn't the SPOF. Maybe even a facebook page too, as a final backup.

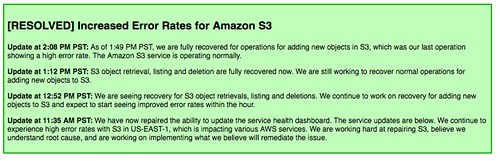

- We can't trust the AWS status page any more.
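The local-buffering option for an offline sink could look something like the sketch below. It is only an illustration under stated assumptions: `BufferingSink` and its methods are hypothetical names, `primary` stands in for whatever actually writes to your store, and a production version would need to worry about disk limits, ordering and duplicate delivery.

```python
import json
import os


class BufferingSink:
    """Try the primary sink; on failure, append the record to a local spool file."""

    def __init__(self, primary, spool_path):
        self.primary = primary        # callable(record); raises on failure
        self.spool_path = spool_path  # local file used while the sink is down

    def write(self, record):
        try:
            self.primary(record)
            return "sent"
        except Exception:
            # Sink is offline: keep the record locally instead of losing it.
            with open(self.spool_path, "a", encoding="utf-8") as spool:
                spool.write(json.dumps(record) + "\n")
            return "buffered"

    def replay(self):
        """Resend spooled records; keep any that still fail. Returns count sent."""
        if not os.path.exists(self.spool_path):
            return 0
        with open(self.spool_path, encoding="utf-8") as spool:
            pending = [json.loads(line) for line in spool if line.strip()]
        still_failing, sent = [], 0
        for record in pending:
            try:
                self.primary(record)
                sent += 1
            except Exception:
                still_failing.append(record)
        # Rewrite the spool so it holds only what could not be delivered.
        with open(self.spool_path, "w", encoding="utf-8") as spool:
            for record in still_failing:
                spool.write(json.dumps(record) + "\n")
        return sent
```

Call `write()` for each incoming record and `replay()` on a timer once the store is believed to be back. Records written during the outage arrive late and out of order, so this only suits data where that is acceptable.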

Anyway, we can now celebrate the fact that the entire internet runs in four places: AWS, Google, Facebook and Azure. And we know what happens when one of them goes offline.